Get Spark from the downloads page of the project website. This documentation is for Spark version 3.1.2. Spark uses Hadoop’s client libraries for HDFS and YARN. Downloads are pre-packaged for a handful of popular Hadoop versions. Users can also download a “Hadoop free” binary and run Spark with any Hadoop version by augmenting Spark’s classpath.

Getting Pig as .rpm or .deb: starting with Pig 0.12, Pig no longer publishes .rpm or .deb artifacts as part of its release. Apache Bigtop provides .rpm and .deb artifacts for Hadoop, Pig, and other Hadoop-related projects.

To verify a Hadoop download, download the Hadoop KEYS file, then run `gpg --import KEYS` followed by `gpg --verify hadoop-X.Y.Z-src.tar.gz.asc`. You can also perform a quick check using SHA-512.

Oct 23, 2018: How to Install Hadoop on Mac

Now let’s move on to the procedure for installing Hadoop on Mac OS X. Installing Hadoop on a Mac is not as simple as typing a single command in Terminal. It requires a mix of knowledge, concentration, and patience. However, you don’t need to worry about not knowing everything.


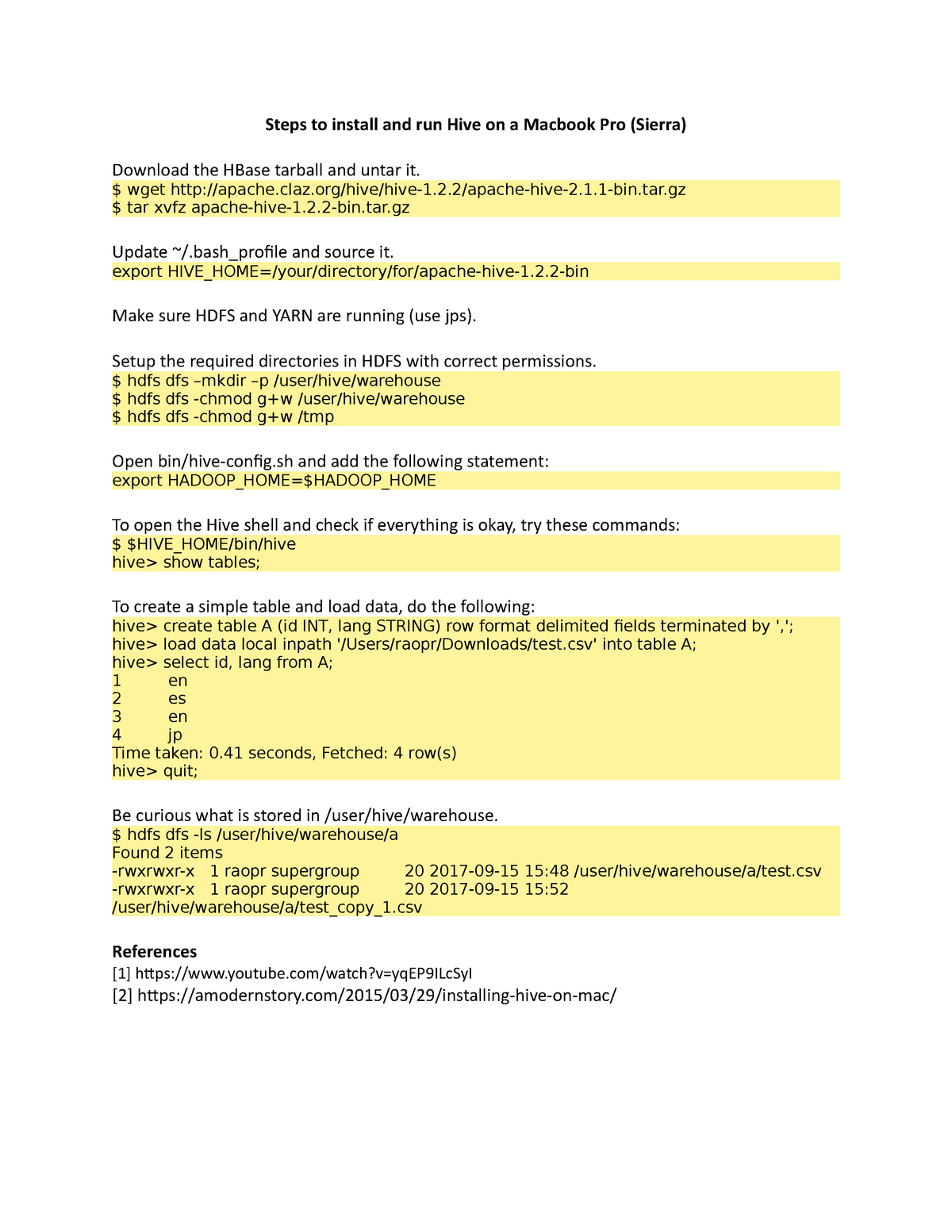

Download Apache Hadoop: you can download the Apache Hadoop 3.0.3 release, or go to the Apache site and download it directly from there. Move the downloaded Hadoop.

Apache Hive was first developed as an Apache Hadoop sub-project to give Hadoop administrators an easy-to-use, capable query language for their data. Because of this, Hive was designed from the start to handle huge amounts of data per query, and it is well suited to large-scale databases and business environments.

This topic explains how to install the Hortonworks driver for Apache Hive, which is a fully compliant ODBC driver that supports multiple Hadoop distributions.

KornShell (ksh) must be installed on the Unica Campaign listener (analytic) server.

Procedure


- Obtain the 64-bit version of the Hortonworks Hive ODBC driver: http://hortonworks.com/hdp/addons

- Install the Hortonworks ODBC driver on the Unica Campaign listener (analytic server):

```shell
rpm -ivh hive-odbc-native-2.0.5.1005-1.el6.x86_64.rpm
```

The default installation location of the Hortonworks Hive ODBC driver is /usr/lib/hive/lib/native.

For more information on installing the Hortonworks Hive ODBC driver see: http://hortonworks.com/wp-content/uploads/2015/10/Hortonworks-Hive-ODBC-Driver-User-Guide.pdf.

- Follow the prompts to complete the installation.

Here are step-by-step instructions for installing plain-vanilla Apache Hadoop and running a rudimentary MapReduce job on Mac OS X.

There are excellent tutorials and instructions for this on the web. Two in particular stand out: the one from Apache and another from Michael Noll. Both are geared more toward a Linux/Ubuntu audience.

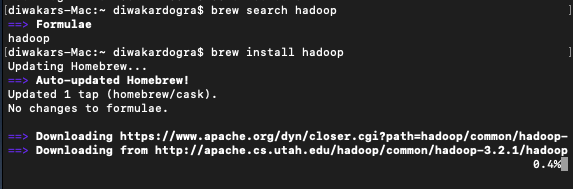

Before you begin, make sure that you have Java 6 or later installed on your Mac. You can download the Hadoop distribution from the Apache site, but the preferred way to get it on a Mac is Homebrew. The version I installed with brew was Hadoop 1.2.1, the most recent stable Hadoop release at the time. It's as easy as typing the command “brew install hadoop”. Homebrew downloads it and places it by default under /usr/local/Cellar, in a directory called hadoop.

There are three modes of running Hadoop: standalone, pseudo-distributed, and fully distributed. Standalone mode is fairly straightforward, and you can easily run it by following the Apache page. Setting up a fully distributed cluster is considerably more complex and beyond the scope of this introductory blog post. The setup we are going to look at is pseudo-distributed mode, which makes a decent development environment. These instructions are a hybrid of those found on the Apache Hadoop site and the Hadoop chapter in the Spring Data book.

Pseudo-distributed mode is essentially a single-node installation of Hadoop, but each Hadoop daemon runs in its own Java process, which is why it is called pseudo-distributed.

The stock config files needed for a pseudo-distributed Hadoop installation come empty, and you need to populate them as follows. This is well documented on the Apache site; what follows repeats what you will find there.

conf/core-site.xml:
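A minimal sketch of this file for a pseudo-distributed Hadoop 1.x setup, written with a shell heredoc. It assumes you run it from the Hadoop directory that contains conf/ (for a Homebrew 1.2.1 install that is probably /usr/local/Cellar/hadoop/1.2.1/libexec, but check your own layout), and it uses the hdfs://localhost:9000 address from the Apache single-node guide; the port itself is only a convention. Note that it overwrites the empty stock file.

```shell
# Run from the Hadoop directory that contains conf/ (assumed location, see above).
mkdir -p conf
# fs.default.name is the Hadoop 1.x property telling clients and daemons where the NameNode listens.
cat > conf/core-site.xml <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
```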

conf/hdfs-site.xml:
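With only one DataNode there is nowhere to put extra copies of a block, so the usual single-node setting lowers the replication factor from its default of 3 to 1. A sketch, assuming you run it from the Hadoop directory that contains conf/; it overwrites the empty stock file.

```shell
# Run from the Hadoop directory that contains conf/ (assumed location).
mkdir -p conf
# A single DataNode can hold only one copy of each block, so set replication to 1 (default is 3).
cat > conf/hdfs-site.xml <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
```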

conf/mapred-site.xml:
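This file tells MapReduce clients and the TaskTracker where the JobTracker runs. A sketch using the localhost:9001 value from the Apache single-node guide (any free port would do), again assumed to be run from the Hadoop directory that contains conf/.

```shell
# Run from the Hadoop directory that contains conf/ (assumed location).
mkdir -p conf
# mapred.job.tracker is the Hadoop 1.x property giving the JobTracker's host:port.
cat > conf/mapred-site.xml <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:9001</value>
  </property>
</configuration>
EOF
```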

Next, make sure that you can ssh to localhost without a passphrase. Try the following in a terminal window.
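Interactively, this check is simply ssh localhost. If you would rather script it, here is a small sketch: the BatchMode=yes option makes ssh fail instead of prompting, so a missing key shows up as an error rather than a password prompt. SSH_STATUS is just an illustrative variable name.

```shell
# BatchMode=yes disables password/passphrase prompts: ssh either logs in with a key or fails.
if ssh -o BatchMode=yes -o ConnectTimeout=5 localhost true 2>/dev/null; then
  SSH_STATUS=ok
else
  SSH_STATUS=needs-setup
fi
echo "passphraseless ssh to localhost: $SSH_STATUS"
```

On the very first connection this can also report needs-setup because localhost's host key is not yet in ~/.ssh/known_hosts; connecting once interactively and accepting the key clears that.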

You might run into two issues. First, your sshd (the ssh daemon process) may not be running. In that case, go to System Preferences > Sharing and enable Remote Login. Make sure that only local users on your system are allowed to ssh into your Mac.

Another issue is that your public key has not been added to your authorized keys (~/.ssh/authorized_keys), so ssh prompts you for a password. To avoid this, execute the following two commands in the terminal.
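A sketch of the usual two steps: generate a key with an empty passphrase, then append its public half to ~/.ssh/authorized_keys. It uses an RSA key rather than the DSA key some older Hadoop guides show, on the assumption that you are on a recent macOS whose OpenSSH no longer accepts DSA keys by default. The existence checks and permission fixes are additions of this sketch, not part of the original two commands; they make it safe to run more than once.

```shell
# Make sure ~/.ssh exists and is private (sshd ignores keys in loosely permissioned locations).
mkdir -p "$HOME/.ssh"
chmod 700 "$HOME/.ssh"
# Generate an RSA key with an empty passphrase (-N ''), unless one already exists at this path.
[ -f "$HOME/.ssh/id_rsa" ] || ssh-keygen -q -t rsa -N '' -f "$HOME/.ssh/id_rsa"
# Authorize that key for logins to this machine, without adding a duplicate line.
PUBKEY=$(cat "$HOME/.ssh/id_rsa.pub")
grep -qxF "$PUBKEY" "$HOME/.ssh/authorized_keys" 2>/dev/null \
  || printf '%s\n' "$PUBKEY" >> "$HOME/.ssh/authorized_keys"
chmod 600 "$HOME/.ssh/authorized_keys"
```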

Now you should be able to ssh into localhost.


Since this is your first installation of Hadoop, you need to format the Hadoop filesystem, HDFS. If you already have work under way in HDFS, don’t format it, or you will lose all the data.

Go to where the Hadoop distribution is installed. If you installed it through brew and got the latest release at the time, it is under /usr/local/Cellar/hadoop/1.2.1.


Now start all the Hadoop daemons:

Once everything is started, you can verify them in two ways:

The output should list all the Hadoop daemon processes that were just started.

You can also inspect the NameNode, JobTracker, and TaskTracker web interfaces at the following three URLs, respectively.

Next, open a different terminal tab and create the directory /tmp/gutenberg/download. Project Gutenberg makes a large number of public-domain books available as plain text, which is convenient input for the word count MapReduce job we are going to run on Hadoop.


On the Mac, you can use the curl command to download the file as follows:

This downloads a file called pg4363.txt.

Next we need to bring this file into HDFS using the hadoop dfs command.

The following command assumes that you installed Hadoop using brew, or that the directory containing the hadoop executable is on your system path.

We can confirm that the file was copied to HDFS with the following command.

The Hadoop distribution comes with an examples jar file that can run various MapReduce jobs. We are going to run the word count job on the file we just copied into HDFS.


The job produces a lot of log output in the terminal as it runs.


Once it completes, you can go to the JobTracker UI at http://localhost:50030/ to see the job that just ran.

The output was written to the HDFS directory /user/gutenberg/output.

We are particularly interested in the file part-r-00000, where the actual output was written. We can view its contents as follows:

Alternatively, we can bring the output to our local file system:


Make sure that you pass the whole HDFS output directory, not a single part file, as the source when copying to the local file system: the copy combines all the files in that directory, which matters when the job produces more than one output file.
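To sanity-check a few counts without Hadoop, a plain Unix pipeline can approximate what the job computes. local_wordcount is a hypothetical helper, not part of Hadoop: like the bundled WordCount example, it splits on whitespace only, so punctuation stays attached to words and case is preserved, and it sorts in byte order, as Hadoop does for Text keys. Treat it as a rough cross-check; small differences (for example around unusual whitespace characters) are possible.

```shell
# Hypothetical helper: approximate the WordCount example on a local file.
# The body runs in a subshell so LC_ALL=C (byte-wise processing) does not leak out.
# Steps: one whitespace-separated token per line, drop the empty line left by
# leading whitespace, byte-order sort, count identical runs, print "word<TAB>count".
local_wordcount() (
  export LC_ALL=C
  tr -s '[:space:]' '\n' < "$1" | grep -v '^$' | sort | uniq -c | awk '{ print $2 "\t" $1 }'
)
```

For example, local_wordcount /tmp/gutenberg/download/pg4363.txt > /tmp/local-counts.txt produces a file in the same word-tab-count layout as part-r-00000, ready to compare against the Hadoop output.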


That's it! We have successfully run a simple word count MapReduce job on a single-node, pseudo-distributed Hadoop cluster.